Abstract

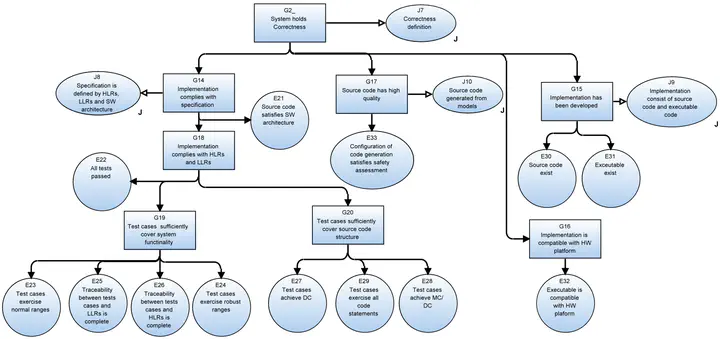

A certification process evaluates whether the risk posed by a system is acceptable for its intended use. Certification processes are complex and usually human-driven: expert evaluators must determine whether software conforms to certification guidelines by examining a large number of development artifacts. As a result, these processes can produce superficial, biased, and lengthy evaluations. In this paper, we propose a computer-aided assurance framework, called the Automated Assurance Case Environment (AACE), that enables the synthesis and validation of assurance cases (ACs) based on a system’s specification, assurance evidence, and domain expert knowledge captured in AC patterns. A commercial aerospace case study shows that the generated ACs are meaningful, and numerical results demonstrate the efficiency of AACE.