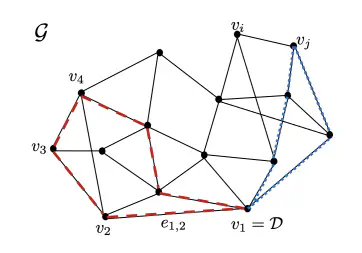

Graph $G$ models tasks (nodes $v_i$) and connections between tasks (edges $e_{(i,j)}$). A fleet of $M$ agents, initially at $v_1 = D$, jointly completes as many tasks as possible without depleting their capacities before returning to $v_1 = D$. A task assignment for $M = 2$ agents is shown in red and blue.
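The routing problem in the caption can be made concrete with a small sketch. It assumes, for illustration only, that each directed edge carries a fixed capacity cost and that every agent has the same capacity budget. Neither detail is specified here, and the helpers `route_cost` and `evaluate_assignment` are hypothetical. Together they check whether one closed route per agent is feasible and count how many distinct tasks the fleet completes:

```python
from typing import Dict, List, Tuple

Edge = Tuple[int, int]  # directed edge (i, j) of the task graph G


def route_cost(route: List[int], cost: Dict[Edge, float]) -> float:
    """Capacity consumed by traversing consecutive edges of a route (assumed additive)."""
    return sum(cost[(a, b)] for a, b in zip(route, route[1:]))


def evaluate_assignment(
    routes: List[List[int]], cost: Dict[Edge, float], capacity: float, depot: int = 1
) -> Tuple[bool, int]:
    """Return (feasible, number of distinct tasks visited) for one route per agent."""
    visited = set()
    for route in routes:
        # Every agent must leave from and return to the depot D = v_1.
        if route[0] != depot or route[-1] != depot:
            return False, 0
        # Every hop must follow an existing edge e_(i,j) of G.
        if any((a, b) not in cost for a, b in zip(route, route[1:])):
            return False, 0
        # The route must not deplete the agent's (hypothetical, shared) capacity.
        if route_cost(route, cost) > capacity:
            return False, 0
        visited.update(v for v in route if v != depot)
    return True, len(visited)


# Toy graph with hypothetical, symmetric capacity costs on each edge.
edges = {(1, 2): 2.0, (2, 3): 1.0, (3, 1): 2.5, (1, 4): 1.5, (4, 5): 1.0, (5, 1): 2.0}
cost = {**edges, **{(b, a): c for (a, b), c in edges.items()}}
red = [1, 2, 3, 1]   # uses 5.5 units of capacity
blue = [1, 4, 5, 1]  # uses 4.5 units of capacity
print(evaluate_assignment([red, blue], cost, capacity=6.0))  # (True, 4)
print(evaluate_assignment([red, blue], cost, capacity=5.0))  # (False, 0)
```

With a budget of 6.0, both routes are feasible and the two agents cover all four tasks. With a budget of 5.0, the red route would deplete its agent's capacity before it returns to $D$, so the assignment is rejected.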

Abstract

This paper introduces a novel data-driven hierarchical control scheme for managing a fleet of nonlinear, capacity-constrained autonomous agents in an iterative environment. We propose a control framework consisting of a high-level dynamic task assignment and routing layer and a low-level motion planning and tracking layer. Each layer of the control hierarchy uses a data-driven Model Predictive Control (MPC) policy, which keeps the computational complexity bounded each time a new task assignment or actuation input is computed. We use collected data to iteratively refine estimates of agent capacity usage and update the MPC policy parameters accordingly. Our approach leverages tools from iterative learning control to integrate learning at both levels of the hierarchy, and it coordinates learning between levels to maintain closed-loop feasibility and performance improvement of the connected architecture.
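The idea of refining capacity-usage estimates from collected data can be illustrated with a minimal sketch. The class `CapacityEstimator` and its update rule are hypothetical. The rule estimates each edge's usage as the largest value observed so far and falls back to a prior before any data exist. This is a conservative choice made only for illustration, not necessarily the update the paper uses. After an iteration, the usage measured by the low-level layer is recorded, and the high-level layer can re-check a route against the refined estimate:

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Edge = Tuple[int, int]  # directed edge (i, j) of the task graph


class CapacityEstimator:
    """Hypothetical per-edge capacity-usage estimator refined across iterations.

    Assumption: the estimate is the maximum usage observed on an edge so far,
    or a prior value if that edge has not been traversed yet.
    """

    def __init__(self, prior: Dict[Edge, float]):
        self.prior = dict(prior)
        self.observed: Dict[Edge, List[float]] = defaultdict(list)

    def record(self, edge: Edge, usage: float) -> None:
        # Store one measured capacity usage from a completed iteration.
        self.observed[edge].append(usage)

    def estimate(self, edge: Edge) -> float:
        data = self.observed.get(edge)  # .get does not create empty entries
        return max(data) if data else self.prior[edge]

    def route_estimate(self, route: List[int]) -> float:
        # Estimated capacity used along consecutive edges of a closed route.
        return sum(self.estimate(e) for e in zip(route, route[1:]))


prior = {(1, 2): 2.0, (2, 3): 1.0, (3, 1): 2.5}  # hypothetical prior usage
capacity = 6.0                                   # hypothetical agent capacity
route = [1, 2, 3, 1]

est = CapacityEstimator(prior)
print(est.route_estimate(route) <= capacity)  # True: 5.5 <= 6.0 under the prior

# Hypothetical measurements reported after executing one iteration.
for edge, usage in [((1, 2), 2.5), ((2, 3), 1.25), ((3, 1), 2.75)]:
    est.record(edge, usage)
print(est.route_estimate(route))  # 6.5, so this route would now exceed capacity
```

In this toy run, the route looks feasible under the prior but not under the data-refined estimate. In a hierarchy like the one described above, that change is the kind of signal that would prompt the high-level layer to reassign tasks before the agent depletes its capacity.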